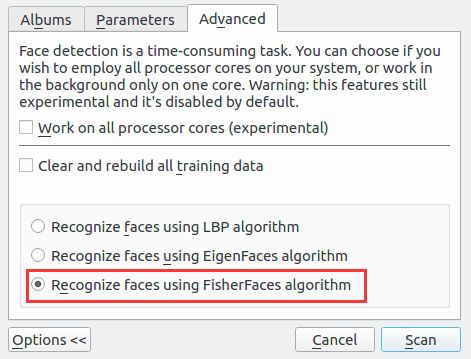

There have been three types of face recognition algorithms in digiKam: LBP, Eigenfaces, and Fisherfaces. Users can choose one with a radio button:

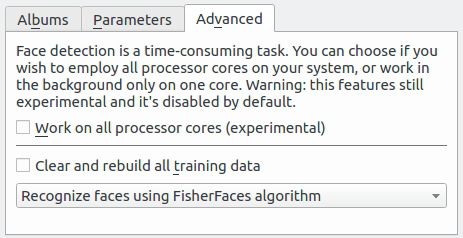

My mentor Gilles told me that it would be better to use a ComboBox, because users don't have to know the details. So I changed the UI and the corresponding code. The UI now looks like:

Face Recognition Accuracy Improved to 99%

I have been writing and testing a new face recognition algorithm using dlib. The algorithm uses deep learning for face recognition. The deep learning method from dlib brings a big improvement: the accuracy can reach 99.2% with only one training image for each class, and even 100% with more training images. First, I will show the experiment results, and then I will talk about the basic idea of the face recognition method, which is connected to how the algorithm will be implemented in digiKam.

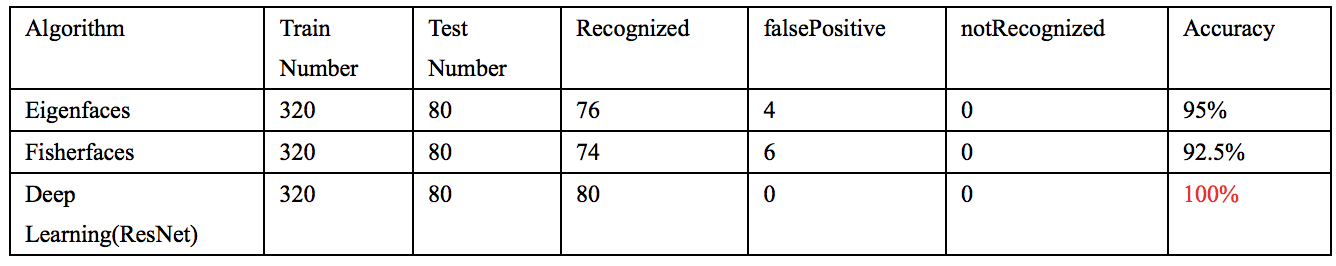

There are 40 classes of faces, with 10 images for each face, in the ORL dataset. I ran four rounds of experiments. In the first round, 8 images of each face are used as training images and 2 images of each face as testing images. This gives 320 images in the training set and 80 in the testing set:

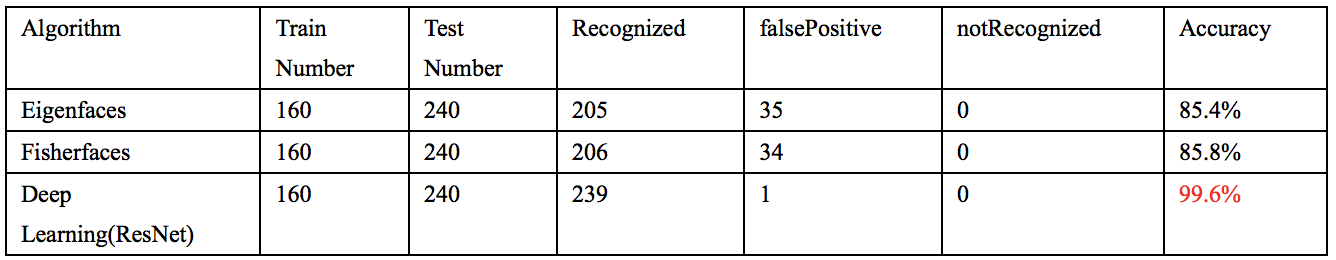

In the second round, 4 images of each face are used as training images and 6 images of each face as testing images. This gives 160 images in the training set and 240 in the testing set:

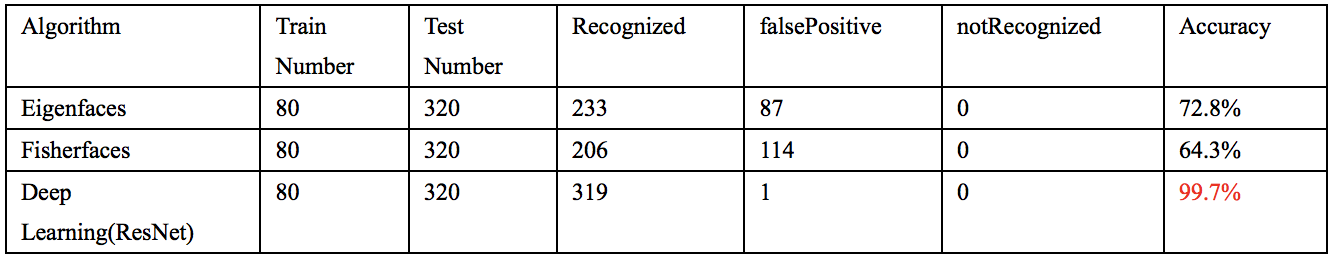

In the third round, 2 images of each face are used as training images and 8 images of each face as testing images. This gives 80 images in the training set and 320 in the testing set:

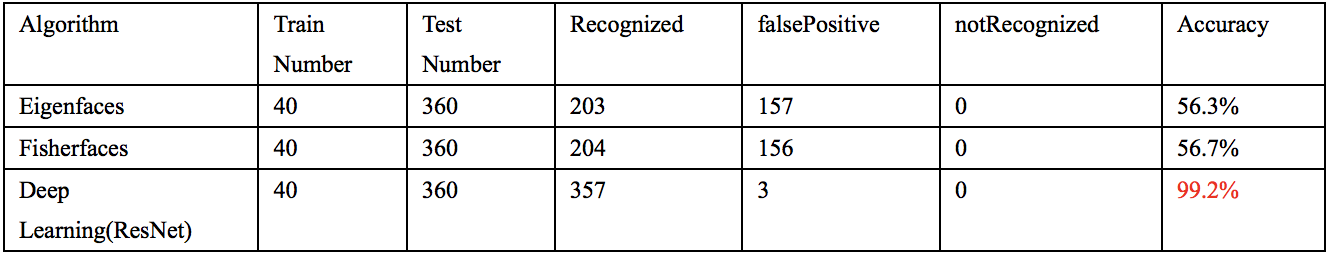

In the fourth round, 1 image of each face is used as the training image and 9 images of each face as testing images. This gives 40 images in the training set and 360 in the testing set.
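The four train/test splits can be reproduced with a few lines of Python. This is only a sketch for checking the set sizes: the file names are placeholders, and taking the first images of each face as the training images is an assumption, since the actual test code may pick them differently.

```python
def split_per_class(images_by_class, n_train):
    """Split every class into n_train training images and the rest for testing."""
    train, test = [], []
    for label, images in images_by_class.items():
        train += [(label, img) for img in images[:n_train]]
        test += [(label, img) for img in images[n_train:]]
    return train, test


# 40 faces with 10 images each, as in the ORL dataset.
# The paths below are hypothetical placeholders, not the real file layout.
orl = {face: [f"face{face}/{i}.pgm" for i in range(1, 11)] for face in range(1, 41)}

# Map "training images per face" to (training set size, testing set size).
sizes = {}
for n_train in (8, 4, 2, 1):
    train, test = split_per_class(orl, n_train)
    sizes[n_train] = (len(train), len(test))

print(sizes)
```

With 40 faces, each round always covers all 400 images; only the balance between the two sets changes.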

The basic idea of the algorithm is to project each face into a space in which images of the same face lie close together and images of different faces lie as far apart as possible. To obtain such a space, a kind of convolutional neural network called ResNet is used. The network was trained on about 3 million faces, and the result is a pretrained model file.

Feed a face image to the model, and the neural network outputs a 128-dimensional vector. We calculate the distance between these vectors, and the recognition process is then just like Eigenfaces and Fisherfaces.
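The distance-based recognition step can be sketched as a nearest-neighbour search. This is a minimal illustration and not the code from the experiments: the names `euclidean` and `recognize` are made up for the example, the optional `threshold` for rejecting unknown faces is an assumption, and short 3-dimensional toy vectors stand in for the 128-dimensional ones that the network would produce.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def recognize(query, gallery, threshold=None):
    """Return (label, distance) of the closest training vector.

    gallery is a list of (label, vector) pairs from the training images.
    If threshold is given and the closest match is farther away than it,
    the label is None, meaning "unknown face".
    """
    best_label, best_dist = None, float("inf")
    for label, vector in gallery:
        dist = euclidean(query, vector)
        if dist < best_dist:
            best_label, best_dist = label, dist
    if threshold is not None and best_dist > threshold:
        return None, best_dist
    return best_label, best_dist


# Toy data: one training vector per person.
gallery = [("alice", [0.0, 0.0, 0.0]), ("bob", [1.0, 1.0, 1.0])]
label, dist = recognize([0.1, 0.0, 0.1], gallery)
print(label, round(dist, 3))
```

With one training image per face, the gallery holds a single vector per label, which is the setting of the fourth experiment round.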

I pushed the test code to GitHub; there is also a README that explains how to run the experiments.

Plan for Next Week

There are no dlib dependencies in digiKam, so I have to rewrite the code: the data structures for images, the class for loading the pre-trained model file, the function to get the 128-d vector, and the other functions used from dlib. This way, we will be able to use the deep learning method while staying independent of dlib.